A Profound Shift

Story by Ellen Marks. Photos by Roberto E. Rosales.

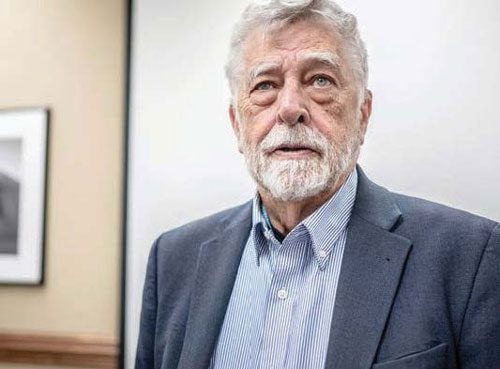

It’s an arcane question, and in the distant past it would have taken Perkins — a professor of medicine at UNM — a day or two to scour the literature and find an answer. In the near past, he could have turned to Google or another search engine to call up a list of possible answers, follow the links and try to determine which was accurate.

Instead, it took him 15 seconds using ChatGPT-4, an advanced artificial intelligence instrument that scours multiple sources to collate an answer, in this case 223.

It’s a tool that has people both fearing its doomsday potential and hailing it as a revolutionary step in education, research, ethics and likely every field of study at UNM.

“Some people are just despondent, thinking this is terrible,” says Leo Lo, dean of UNM’s College of University Libraries and Learning Sciences. “Some are optimistic, like me. Some feel it’s not ready for prime time, and that it has a lot of flaws. But the fact is it’s already here. Education will be changed forever because this thing happened.”

Like universities everywhere, UNM is struggling to understand what the world of generative AI will bring and how to best prepare for it and use it to its best advantage. And it’s doing so as the technology undergoes constant and rapid change.

“We’re trying to run as fast as we can,” says Perkins, co-director of the medical school’s MD/PhD program and board president of the state’s Bioscience Authority. “It’s changing as we talk. By the time we hang up, it will probably be different.”

UNM is among 19 universities in the United States and Canada that are participating in a two-year research project to prepare for advanced technology. The work is being done under the auspices of Ithaka S+R, a not-for-profit research group.

There are currently no university-wide guidelines at UNM, other than the standard prohibitions against plagiarism and cheating, says Todd Quinn, an Ithaka team member and business and economics librarian in Libraries and Learning Sciences at UNM.

Generative AI is fundamentally different from basic artificial intelligence (think Siri or customer service chatbots) because it can create new content.

Melanie Moses, a UNM computer science and biology professor, describes it as “a new category of algorithms.”

“The basic technology hasn’t changed much in the last 50 years, but the impressive ability to generate new images and texts and videos, that’s really exploded in the last year,” says Moses, who runs a biological computation lab. “It is kind of astounding.”

The key to the explosion, says Moses, is new tools that rely on “billions and billions of lines of text to make predictions based on patterns in the past.”

Often, the results of a ChatGPT search can be so convincing that they are indistinguishable from what a human would create.

The technology has the potential for everything from addressing climate change or cybersecurity problems to finding cancerous tumors at a very early stage.

However, the tools, as they exist now, can yield grossly inaccurate results.

“Sometimes it just makes stuff up and then doubles down on its lies,” Moses says. “It can be quite misleading.”

Lo pointed to an incident at Texas A&M University-Commerce this year in which an animal sciences instructor suspected at least some of his students had used ChatGPT to complete their work.

To test his theory, he fed some of his students’ writings into ChatGPT and asked it whether artificial intelligence had produced the work. The tool claimed credit for all of it and the instructor gave his entire class an “incomplete” grade.

Students unleashed an outcry, the university said it was investigating, and critics pointed out that ChatGPT is not considered a reliable resource for answering such a question. The university later said no students failed the class or were barred from graduating because of the issue.

“The tool is very good at synthesizing a lot of data but we’re seeing a lot of students getting fake citations” on research papers, says Lo, who is leading UNM’s six-member Ithaka team. “We have to tell them these don’t exist.”

Another difficult problem involves biases that plague the information found everywhere online. For example, a basic assumption is that doctors are men, so anything produced with generative AI is likely to have that bias, Moses says.

A case in point: An early AI model was asked to predict the winner of the 2016 presidential election. Contrary to what many polls had forecast, the model’s prediction was Republican Donald Trump over Democratic candidate Hillary Clinton.

“But then the question is why, and the why is, well, because men always win,” Perkins says. “Based on our culture, that’s correct, right? You could say it was pretty smart, but is it just reflecting, like a mirror?”

Moses points out that the gargantuan amounts of information that feed the intelligence tools can include outdated historical records, such as “books written in 1750.”

“Any stereotype you can come up with, these models tend to not just reflect them but exaggerate them,” Moses says. “The internet is full of vile, violent kinds of text. The internet, you might argue, represents the worst of humanity.”

And what’s on the internet forms the building blocks for much of AI’s output.

Perkins says he offers a “positive spin” on the bias problem. Since our culture is full of biases anyway, he says, looking closely at what these new tools produce is another opportunity to examine humanity’s prejudices.

“It’s not like ChatGPT is making the biases up,” he says. “They’re in our culture, they’re in our data and in all these materials the (tools) have been trained on. It gives us another opportunity to confront them and address them and deal with them.”

And if there’s some resistance to adapting to the new AI reality, that’s probably to be expected, says Doug Ziedonis, MD, MPH, CEO of the UNM Health System and executive vice president of UNM Health Sciences.

Ziedonis, a psychiatrist, said he and other physicians chafed at the electronic health record when it was introduced years ago, and now accept it as part of medical practice.

“At a very high level, to me,” he says, “it’s not a question of if it’s going to happen. It’s happening. And I think that’s going to profoundly shift how we teach everybody.”

And how about what some say is the most frightening prospect of AI: that it could advance far enough to take over the world and make humans its slaves?

“It doesn’t keep me up at night,” says Moses. “My real concern with that way of thinking is that it puts the fear into something that’s in the distant future that no one feels like they can do anything about. It puts less attention on, we can make these algorithms better right now.”

But ask ChatGPT itself if it has any such apocalyptic plans, and the answer is an artificially intelligent “no way.”

“The idea of AI taking over the world is a common theme in science fiction,” the chatbot says. “Generative AI, like the technology that powers me, is a tool created by humans to perform specific tasks. It doesn’t have consciousness, intentions or the ability to plan or make executive actions independently.”

Carter Frost, a UNM senior who is studying computer science, says he uses advanced AI extensively because “it’s quite useful for boilerplate stuff.”

It can write computer code and do other tasks that can easily be double-checked for accuracy, Frost says.

“Even when it’s wrong, it’s not mission critical, and I can just do it myself,” he says. “Ninety-five percent of the stuff it creates is correct or good enough.”

And while Frost says he doesn’t rely on ChatGPT to do his homework, he does use it to help with the intermediate steps of getting a project done, such as breaking a big job into smaller pieces, as long as there is no classroom ban on doing so.

“It’s definitely saving me a lot of time,” he says. “It gives me time to work on maybe higher-level things… because a lot of the busy work has been taken away.”

Adrian Faust, also an undergraduate majoring in computer science, turns to AI for help in reviewing concepts he’s learned in the classroom. It can explain things that still might be fuzzy, he says.

And it’s that kind of potential as a “partner in learning” that makes Lo optimistic about a technological revolution. Say you’re out of your depth in a math class, he says. The instructor has explained something to you several times, but it’s not getting through.

Before, “If I still didn’t get it, I didn’t get it and I’m stuck,” Lo says. “But (with AI), I could say, ‘Explain it to me like a 5-year-old.’ This tool is limited only by your own imagination, in some ways.”

Moses hopes that someday “everyone has an AI tutor who understands where they are and how to get to what they need to know.”

Students with a learning or other disability could receive specialized guidance to help them keep up.

“I can see it’s possible these tools could help students learn much better,” she says. “That’s much closer to possible than it was before these tools came out in the last year. Will we get there? I don’t know. It needs several pieces to fall in place.”

Ithaka S+R’s multi-university effort to get an academic handle on generative AI includes Ivy League schools, such as Princeton and Yale universities; large state universities like the University of Arizona and UNM; and smaller, less well-known schools, such as Bryant University in Rhode Island and Queen’s University in Ontario, Canada.

The first step has been surveying faculty across each participating campus to “give us more insight into what’s happening locally,” Quinn says.

The UNM team is learning that some instructors are using generative AI to simply manage and respond to email. Lo, for one, does so, but he lets the recipient know when his response has a little artificial assistance.

Others are using it to “brainstorm concepts,” while some are “purposely using it in the classroom,” Quinn says.

What Quinn says he worries about is the effect on white-collar jobs across the economy, which are already being threatened by the capabilities of artificial intelligence.

“There are already places where AI does a good enough job for what we want,” he says. “That will mean disruption for many positions.”

One thing is certain, and that is universities will have to teach students to ethically and effectively use these tools as a job skill, no matter what field they end up in, several faculty members said.

“If you’re in the workforce, you need to know how to use this, or you’re not going to be very competitive as an individual, and also, your organization is not going to be,” says Laura Hall, a division head in the Health Sciences Library & Informatics Center and a member of UNM’s Ithaka team.

Says Lo: “People who know how to use AI will have a huge advantage. There’s a saying out there: ‘Humans are not going to be replaced by AI, at least in the short-term, but will be replaced by people who use AI.’”

“Instructors may have to figure out other ways to assess learning.”

And if students are getting artificial assistance in doing homework, maybe they don’t need to learn whatever skills the homework is trying to teach, he says. “I think maybe this is a chance for us to rethink what education is. Maybe we use mental energy for something of a higher order.”

UNM as a whole, and certain academic departments, have started preparing for what’s ahead on the AI front.

For example, a new AI website — airesources.unm.edu/ — lists resources for students, faculty and staff. Ziedonis says he has budgeted $2.4 million to help Health Sciences begin preparing. And Moses is in an algorithmic justice group involving UNM and the Santa Fe Institute that seeks to “demystify algorithms and help everyone understand how they work in the real world,” according to the project’s website.

Moses also is writing to newspapers and speaking to legislators and other computer scientists about the coming implications of AI. She and several other professors spoke to UNM alumni on the topic at a Lobo Living Room session in September.

Such preparations are an initial step before the larger job of providing faculty support and training on how to use and teach the technology.

And that, Quinn says, will require money, although no one knows how much.

“Dollars for faculty to go to workshops, bringing people in to teach the tool in various disciplines, helping people overcome technological issues. I see a lot of training.”

Larger, wealthier schools are making big investments. The University of Southern California is spending more than $1 billion on a seven-story building that will house a new AI school and 90 additional faculty members. Oregon State University is planning a state-of-the-art AI research center that will house a supercomputer and a cyber-physical playground with next-generation robotics.

As for UNM, its contribution to the changing world might be its richly diverse student and faculty population, Quinn says. A recent New York Times ranking recognized UNM as No. 1 among the nation’s flagship universities when it comes to economic diversity.

“Having that uniqueness may allow for more voices we don’t hear from at a more elite university,” he says. “It may be if I talk about water, there are people who grew up… working around acequias or rivers or with farming. Those experiences can help come up with an idea using AI that we haven’t thought of before.”

Diversity is the touchstone for Davar Ardalan, a UNM graduate and founder of TulipAI, headquartered in southwest Florida. The company is using AI to find and produce audio sound effects, among other services.

Ardalan, a journalist and former National Geographic audio executive producer, says her work marries storytelling with artificial intelligence’s ability to cast a limitless net for content. Her company has a “strong commitment” to ethical practices that include making sure those who created the content are paid and bringing forward voices that are not often heard.

Although the future of AI is a daunting and sometimes scary prospect, it can provide sheer beauty that didn’t exist before, she says.

Ardalan and her sisters are creating an AI-generated collection of her mother’s writings, YouTube videos and other personal materials she produced before she died in 2020.

Ardalan’s mother, Laleh Bakhtiar — also a UNM graduate — was a renowned Iranian-American scholar who wrote, translated and edited more than 150 books.

The collection “will hold the body of her wisdom and allow a new audience to discover her. We can learn from her, even though she’s not here.”

As Ardalan seeks to unearth Native American and other lesser-heard voices, she is speaking out about the importance of teaching students how to use AI ethically and was part of a New York Academy of Sciences working group to prepare for what’s coming.

“This is one of the most profound technological moments of our generation,” she says. “We can form the creation of it.”

Spring 2024 Mirage Magazine Features
